For a project on transport data, I needed access to the National Rail timetables to calculate passing points for trains at every station. The National Public Transport Data Repository (NPTDR) TransXChange data is available to download from the data.gov.uk site, but it’s easier to get it from the Department for Transport site: http://data.dft.gov.uk/NPTDR/index.html

TransXChange is an XML format which supersedes the old “CIF” files, which used a coded ASCII format. The NPTDR is only a yearly snapshot taken in October, so the October 2010 data was the latest available. Once I had downloaded it, I had to write some software to calculate all the passing points.

I’m using C#, so the first thing I did was to autogenerate C# classes matching the TransXChange schema. All the necessary schema files are available from http://www.transxchange.org.uk/schema/schemas.htm and, as my TransXChange data uses the 2.1 schema, I downloaded that version.

Next, I used the following command to generate the classes from the schema files with Visual Studio’s “xsd” tool:

[code]
xsd.exe -c -l:c# -n:TransXChangeXML TransXChange_general.xsd TransXChange_common.xsd TransXChange_types.xsd TransXChange_registration.xsd apd\AddressTypes-v1-3.xsd apd\BS7666-v1-3.xsd apd\CitizenIdentificationTypes-v1-3.xsd apd\CommonSimpleTypes-v1-3.xsd apd\ContactTypes-v1-3.xsd apd\PersonalDetailsTypes-v1-3.xsd apd\PersonDescriptiveTypes-v1-0.xsd
[/code]

This generated a set of C# classes in the TransXChangeXML namespace (the root element becomes TransXChangeXML.TransXChange), although I got lots of warnings about multiple definitions, which I ignored.

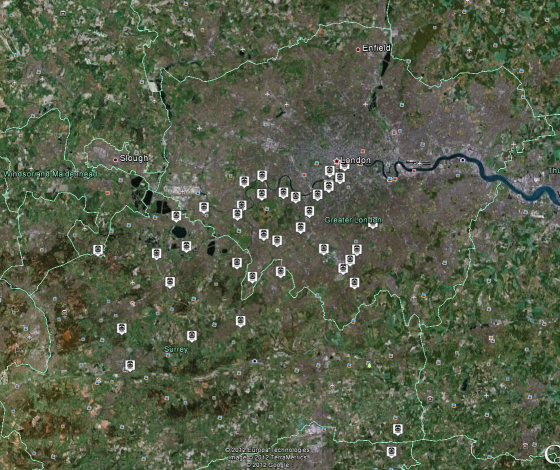

The next step was deserialising the “ATCO_490_TRAIN.TXC” file into these classes. I’m using area 490, which is Greater London, so I had to unpack the relevant zip files from the TransXChange download.

After spending some time with the TransXChange manual and doing some experimentation, I worked out that the method for calculating passing points is as follows:

FOR EVERY “<Service>”

For every “<JourneyPattern>” inside “<StandardService>”, get its id, which is the journey reference code (e.g. “JP8755”), and also the sequence code from its “<JourneyPatternSectionRefs>” (e.g. “SEQ12SEC11”).

Look up the “<JourneyPatternRef>” code in the “<VehicleJourneys>/<VehicleJourney>” section. This gives an absolute departure time and a list of vehicle journey timing links, each referencing a “<JourneyPatternTimingLinkRef>” (e.g. “SEC162POS95”) and carrying run times and wait times for the “from” and “to” ends of the link. These are merged with the timing links from “<JourneyPatternSections>”, looked up using the “SEQ_SEC_” reference from earlier. Where both exist, the vehicle journey timing links take precedence over the journey pattern timing links.

FOR EVERY TimingLink from previous stage

Link Arrival Time = Previous Link Departure Time + RunTime

Link Departure Time = Arrival Time + Wait Time from end of previous link (“TO”)
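The timing rule above can be sketched on its own, independently of the xsd-generated classes. This is a minimal sketch under some assumptions: the TimingLink and PassingPoint records and the PassingPoints.Calculate method are hypothetical names, the times have already been parsed into TimeSpan values, and a vehicle journey value, where present, simply replaces the journey pattern run time or “to” wait time, as described in the merge step above. Records need C# 9 or later.

[code language="csharp"]
using System;
using System.Collections.Generic;

// Hypothetical stand-in for one journey pattern timing link. VehicleRunTime and
// VehicleToWaitTime hold any vehicle journey overrides (null when there is none).
public record TimingLink(string From, string To, TimeSpan RunTime, TimeSpan ToWaitTime,
                         TimeSpan? VehicleRunTime = null, TimeSpan? VehicleToWaitTime = null);

// Arrival and departure times at one stop.
public record PassingPoint(string Stop, DateTime Arrive, DateTime Depart);

public static class PassingPoints
{
    public static List<PassingPoint> Calculate(DateTime departure, IReadOnlyList<TimingLink> links)
    {
        var points = new List<PassingPoint>();
        DateTime arrive = departure;        // arrival at the "from" stop of the current link
        TimeSpan fromWait = TimeSpan.Zero;  // wait carried over from the previous link's "to" end

        foreach (TimingLink link in links)
        {
            // vehicle journey values take preference over journey pattern values
            TimeSpan run = link.VehicleRunTime ?? link.RunTime;
            TimeSpan toWait = link.VehicleToWaitTime ?? link.ToWaitTime;

            // Link Departure Time = Arrival Time + wait from the end of the previous link
            DateTime depart = arrive + fromWait;
            points.Add(new PassingPoint(link.From, arrive, depart));

            // Link Arrival Time (at the next stop) = this departure + RunTime
            arrive = depart + run;
            fromWait = toWait;
        }

        // terminus: record the final arrival, with departure set equal to it
        if (links.Count > 0)
            points.Add(new PassingPoint(links[links.Count - 1].To, arrive, arrive));
        return points;
    }
}
[/code]

Fed the first three links of JP8755 (05:12 departure, run times of 3, 4 and 3 minutes, and a one-minute wait at Vauxhall and at Clapham Junction), this gives 05:15/05:16 at 9100VAUXHLM, 05:20/05:21 at 9100CLPHMJM and an arrival of 05:24 at 9100ERLFLD, which matches the output of the full program further down.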

The code for this is copied below:

[code language="csharp"]
using System;
using System.Collections.Generic;
using System.Xml;
using System.Xml.Serialization;

//The following runs inside a method. Filename is the path to ATCO_490_TRAIN.TXC, and
//ParseTime (not shown) converts a TransXChange duration string into a TimeSpan.

//read TransXChange file
XmlReaderSettings settings = new XmlReaderSettings();
settings.ConformanceLevel = ConformanceLevel.Fragment;
settings.IgnoreWhitespace = true;
settings.IgnoreComments = true;

using (XmlReader reader = XmlReader.Create(Filename, settings))
{
    XmlSerializer SerializerObj = new XmlSerializer(typeof(TransXChangeXML.TransXChange));
    TransXChangeXML.TransXChange TXC = (TransXChangeXML.TransXChange)SerializerObj.Deserialize(reader);

    //Build a JourneyPatternSection index by the section code
    Dictionary<string, TransXChangeXML.JourneyPatternSectionStructure> JPS =
        new Dictionary<string, TransXChangeXML.JourneyPatternSectionStructure>();
    foreach (TransXChangeXML.JourneyPatternSectionStructure JourneyPattern in TXC.JourneyPatternSections)
    {
        JPS.Add(JourneyPattern.id, JourneyPattern);
    }

    //build an index of vehicle journeys by the JP ref code so that we can look them up easily
    Dictionary<string, TransXChangeXML.VehicleJourneyStructure> VehicleJourneyByJPRef =
        new Dictionary<string, TransXChangeXML.VehicleJourneyStructure>();

    //VehicleJourneys have departure times
    foreach (TransXChangeXML.VehicleJourneyStructure VehicleJourney in TXC.VehicleJourneys.VehicleJourney)
    {
        string VJourneyCode = VehicleJourney.VehicleJourneyCode;
        DateTime VDepartureTime = VehicleJourney.DepartureTime;
        string VOperatorRef = VehicleJourney.OperatorRef.Value; //e.g. SW=SW TRAINS

        //Item holds either a JourneyPatternRef or a VehicleJourneyRef (a reference to another VehicleJourney).
        //Only the JourneyPatternRef gives us a JP code. This must be an xsd quirk? All this just to get the JP code?
        string JPRef = "";
        TransXChangeXML.JourneyPatternRefStructure JPRefItem = VehicleJourney.Item as TransXChangeXML.JourneyPatternRefStructure;
        if (JPRefItem != null) JPRef = JPRefItem.Value;

        //TODO: need an array of the duplicates indexed by JPRef
        if (!string.IsNullOrEmpty(JPRef))
        {
            if (VehicleJourneyByJPRef.ContainsKey(JPRef))
            {
                //System.Diagnostics.Debug.WriteLine("Duplicate key: " + JPRef);
            }
            else
                VehicleJourneyByJPRef.Add(JPRef, VehicleJourney);
        }
    }

    //Now go through all the services, linking them up to the VehicleJourneys via their JP reference. The VehicleJourney contains the
    //parent sequence of stops which is then overridden by any JourneyPatternSectionRefs contained in the JourneyPattern.
    //Services only have relative timings on them, but link to VehicleJourneys through the JP ref, which gives us absolute times
    foreach (TransXChangeXML.ServiceStructure Service in TXC.Services)
    {
        TransXChangeXML.StandardServiceStructure StandardService = Service.StandardService;
        string SDDestination = StandardService.Destination.Value;
        string SDOrigin = StandardService.Origin.Value;
        //The parent service destination and origin only seem to be to do with the grouping and not something you would actually display
        //System.Diagnostics.Debug.WriteLine("Service: Destination = " + SDDestination + " Origin = "+SDOrigin);

        foreach (TransXChangeXML.JourneyPatternStructure JP in StandardService.JourneyPattern)
        {
            string JPRef = JP.id; //this is the JP number
            if (JPRef == "JP8755") //This is Shepperton
            {
                string DestinationDisplay = JP.DestinationDisplay.Value; //this overrides the StandardService Destination

                //lookup the journey using the JP code, which gives us a departure time and the section links which can be overridden
                TransXChangeXML.VehicleJourneyStructure VehicleJourney = VehicleJourneyByJPRef[JPRef];
                DateTime DepartureTime = VehicleJourney.DepartureTime;

                //make a list of the timing link overrides from the VehicleJourneys which also gives us waiting times and additional runtimes
                Dictionary<string, TransXChangeXML.VehicleJourneyTimingLinkStructure> VTimingLinks =
                    new Dictionary<string, TransXChangeXML.VehicleJourneyTimingLinkStructure>();
                if (VehicleJourney.VehicleJourneyTimingLink != null)
                {
                    foreach (TransXChangeXML.VehicleJourneyTimingLinkStructure TimingLink in VehicleJourney.VehicleJourneyTimingLink)
                    {
                        VTimingLinks.Add(TimingLink.JourneyPatternTimingLinkRef.Value, TimingLink);
                    }
                }

                //get journey pattern timing links from SEQ SEC ref number
                System.Diagnostics.Debug.WriteLine("Departure Time: "+DepartureTime + " Destination display: " + DestinationDisplay + " JP id=" + JP.id);

                //now traverse the Journey accumulating a TimeDelta at each stop relative to the VehicleJourney Departure Time
                TimeSpan TimeDelta = new TimeSpan();
                TimeSpan FromWaitTime = new TimeSpan();
                TimeSpan ToWaitTime = new TimeSpan();

                foreach (TransXChangeXML.JourneyPatternSectionRefStructure SeqSecRef in JP.JourneyPatternSectionRefs)
                {
                    System.Diagnostics.Debug.WriteLine("REF: " + SeqSecRef.Value);
                    TransXChangeXML.JourneyPatternSectionStructure JourneyPattern = JPS[SeqSecRef.Value]; //lookup JourneyPattern using SEQ SEC ref number

                    foreach (TransXChangeXML.JourneyPatternTimingLinkStructure TimingLink in JourneyPattern.JourneyPatternTimingLink)
                    {
                        //todo: need activity in here…
                        TimeSpan RunTime = ParseTime(TimingLink.RunTime);
                        FromWaitTime = ToWaitTime; //weird – wait time on end of last segment rolled around to start of this segment
                        ToWaitTime = new TimeSpan();
                        if (!string.IsNullOrEmpty(TimingLink.To.WaitTime)) ToWaitTime = ParseTime(TimingLink.To.WaitTime);

                        if (VTimingLinks.ContainsKey(TimingLink.id))
                        {
                            TransXChangeXML.VehicleJourneyTimingLinkStructure VTimingLink = VTimingLinks[TimingLink.id]; //VehicleTimingLink lookup
                            if (!string.IsNullOrEmpty(VTimingLink.RunTime)) RunTime = ParseTime(VTimingLink.RunTime);
                            if ((VTimingLink.To != null) && (!string.IsNullOrEmpty(VTimingLink.To.WaitTime)))
                            {
                                ToWaitTime = ParseTime(VTimingLink.To.WaitTime);
                            }
                        }

                        System.Diagnostics.Debug.WriteLine("Arrive: " + (DepartureTime+TimeDelta) + " Depart: " + (DepartureTime+TimeDelta+FromWaitTime)
                            + " " + TimingLink.id + " " + TimingLink.From.StopPointRef.Value + " " + TimingLink.To.StopPointRef.Value
                            + " Runtime="+RunTime+" FromWaitTime="+FromWaitTime+" ToWaitTime="+ToWaitTime);

                        TimeDelta = TimeDelta + FromWaitTime + RunTime;
                    }
                }

                System.Diagnostics.Debug.WriteLine("Final arrival: "+(DepartureTime+TimeDelta));
            }
        }
    }
}
[/code]
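The listing calls a ParseTime helper that isn’t shown, and leaves a TODO about VehicleJourneys that share a JP ref. Here is a minimal sketch of both, assuming the run and wait times are xsd:duration strings such as “PT3M” (the listing treats them as plain strings). TxcHelpers, ParseTime and IndexAll are hypothetical names rather than part of the generated code; the duration parsing itself is done by the framework’s XmlConvert.ToTimeSpan.

[code language="csharp"]
using System;
using System.Collections.Generic;
using System.Xml;

public static class TxcHelpers
{
    // Hypothetical helper: converts an xsd:duration string (e.g. "PT3M") into a TimeSpan.
    // A missing or empty value is treated as zero.
    public static TimeSpan ParseTime(string duration)
    {
        if (string.IsNullOrEmpty(duration)) return TimeSpan.Zero;
        return XmlConvert.ToTimeSpan(duration);
    }

    // Hypothetical helper: groups items by key so that every item sharing a key is kept,
    // instead of only the first one as with a plain Dictionary. Empty keys are skipped.
    public static Dictionary<string, List<T>> IndexAll<T>(IEnumerable<T> items, Func<T, string> key)
    {
        var index = new Dictionary<string, List<T>>();
        foreach (T item in items)
        {
            string k = key(item);
            if (string.IsNullOrEmpty(k)) continue;
            if (!index.TryGetValue(k, out List<T> list))
            {
                list = new List<T>();
                index.Add(k, list);
            }
            list.Add(item);
        }
        return index;
    }
}
[/code]

Using the listing’s own names, the vehicle journey index could then be built with something like `TxcHelpers.IndexAll(TXC.VehicleJourneys.VehicleJourney, vj => (vj.Item as TransXChangeXML.JourneyPatternRefStructure)?.Value)`, which keeps a list of every VehicleJourney per JP ref rather than discarding the duplicates.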

I’ve limited the code to produce the passing points for a single journey pattern, “JP8755”, which is a Waterloo to Shepperton service. This produces the following output:

[code]
Departure Time: 01/01/0001 05:12:00 Destination display: Shepperton Rail Station JP id=JP8755
REF: SEQ12SEC11
Arrive: 01/01/0001 05:12:00 Depart: 01/01/0001 05:12:00 SEQ12POS88 9100WATRLMN 9100VAUXHLM Runtime=00:03:00 FromWaitTime=00:00:00 ToWaitTime=00:01:00
Arrive: 01/01/0001 05:15:00 Depart: 01/01/0001 05:16:00 SEQ12POS89 9100VAUXHLM 9100CLPHMJM Runtime=00:04:00 FromWaitTime=00:01:00 ToWaitTime=00:01:00
Arrive: 01/01/0001 05:20:00 Depart: 01/01/0001 05:21:00 SEQ12POS90 9100CLPHMJM 9100ERLFLD Runtime=00:03:00 FromWaitTime=00:01:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:24:00 Depart: 01/01/0001 05:24:00 SEQ12POS91 9100ERLFLD 9100WDON Runtime=00:04:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:28:00 Depart: 01/01/0001 05:28:00 SEQ12POS92 9100WDON 9100RAYNSPK Runtime=00:03:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:31:00 Depart: 01/01/0001 05:31:00 SEQ12POS93 9100RAYNSPK 9100NEWMLDN Runtime=00:03:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:34:00 Depart: 01/01/0001 05:34:00 SEQ12POS94 9100NEWMLDN 9100NRBITON Runtime=00:03:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:37:00 Depart: 01/01/0001 05:37:00 SEQ12POS95 9100NRBITON 9100KGSTON Runtime=00:03:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:40:00 Depart: 01/01/0001 05:40:00 SEQ12POS96 9100KGSTON 9100HAMWICK Runtime=00:02:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:42:00 Depart: 01/01/0001 05:42:00 SEQ12POS97 9100HAMWICK 9100TEDNGTN Runtime=00:03:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:45:00 Depart: 01/01/0001 05:45:00 SEQ12POS98 9100TEDNGTN 9100FULWELL Runtime=00:04:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:49:00 Depart: 01/01/0001 05:49:00 SEQ12POS99 9100FULWELL 9100HAMPTON Runtime=00:04:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:53:00 Depart: 01/01/0001 05:53:00 SEQ12POS100 9100HAMPTON 9100KMPTNPK Runtime=00:03:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:56:00 Depart: 01/01/0001 05:56:00 SEQ12POS101 9100KMPTNPK 9100SUNBURY Runtime=00:02:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 05:58:00 Depart: 01/01/0001 05:58:00 SEQ12POS102 9100SUNBURY 9100UHALIFD Runtime=00:02:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Arrive: 01/01/0001 06:00:00 Depart: 01/01/0001 06:00:00 SEQ12POS103 9100UHALIFD 9100SHEPRTN Runtime=00:05:00 FromWaitTime=00:00:00 ToWaitTime=00:00:00
Final arrival: 01/01/0001 06:05:00
[/code]

I checked this against the South West Trains timetable for the service, and all the arrival and departure times are correct. It’s worth pointing out that, although this data is the October 2010 timetable, the timetable currently in operation hasn’t changed since then. This code should also be treated with caution, as it hasn’t been rigorously tested. As some of the comments show, there are parts of the TransXChange schema that I’ve ignored for the sake of simplicity, for example the activity at each stop and whether it’s a “Dead Run”.

Now that I have a list of passing points for every station in Greater London, I can use the information to build a real-time train tracking system.