As part of the ESRC Festival of Social Science, CASA and Leeds University held a three-day event at Leeds City Museum called “Smart Cities: Bridging the Physical and Digital”, which took place on the 8th, 9th and 10th of November.

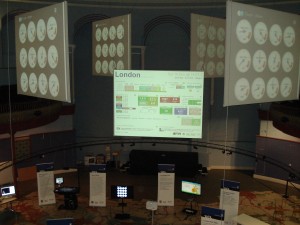

The museum’s central arena was a fantastic choice of venue for the exhibition because of its domed roof and suspended overhead screens (see picture). There was also a map of the Leeds area set into the floor, and a first-floor gallery where people could look down on the exhibits and take pictures.

The event also coincided with a market in the square outside and a number of children’s events in the museum on the Saturday, so we had plenty of visitors.

Although this was a follow-up to the Smart Cities event we held in London in April, a number of exhibits were added or changed. Firstly, we added a second pigeon sim centred on Leeds City Museum, alongside the London version centred on City Hall. Although we expected the Leeds one to be popular, people seemed fascinated by the London version and the fact that they could fly around all the famous landmarks. I spent a lot of time giving people directions to the Olympics site and pointing out famous places. Having watched so many people fly around London, I think it would be interesting to see how the experience changes their spatial perception, as many people don’t realise how small some things are or how densely packed London is.

Both pigeon sims use Google Earth, controlled via an Xbox Kinect and its skeleton tracking. This has always worked very well in practice, but did require some height adjustment for the under-fives. One of the pictures below also shows the riots table, which uses another Kinect camera to sense Lego police cars on the surface of the table. A model of the London riots runs on the computer and displays a map on the table, and players control the model by moving the police cars around on it. The Lego cars survived fairly intact, despite being continually broken into pieces, and lots of children enjoyed rebuilding them for us.

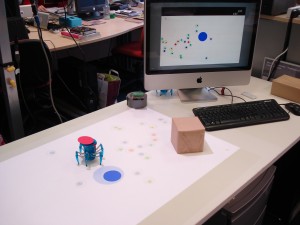

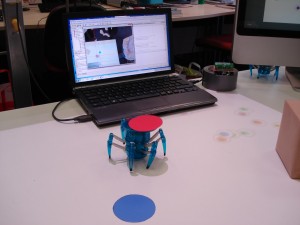

Another change from the London “Smart Cities” exhibition was the addition of HexBug spiders to the Roving Eye exhibit. Previous posts have covered how a HexBug spider was modified so that it could be controlled from a computer.

The original “Roving Eye” projected “eyeball” agents onto the table and used a Kinect camera to sense objects placed on the table, which formed barriers. The HexBug spider adds a physical robot that moves around the table and can be detected by the Kinect camera, causing the eyeballs to avoid it. The exhibit is built from two completely separate systems: the iMac, Kinect and projector run the Roving Eye Processing sketch (left computer), while the Windows 7 machine (right) uses a cheap webcam, an Arduino, OpenCV and a modified HexBug transmitter to control the spider. This makes it an interesting example of “Bridging the Physical and Digital”, and during the three days of the exhibition we had a lot of discussions with visitors about crowd modelling in general.
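The Roving Eye sketch itself isn’t reproduced here, but the idea of agents steering around a sensed obstacle can be illustrated with a minimal Python sketch. It assumes each eyeball is a point agent with a velocity, and that the depth camera reports obstacles (such as the spider) as a list of (x, y) points. The names `Agent`, `step`, `avoid_radius` and `max_speed` are hypothetical, and the real sketch may well use a different steering rule.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    """A hypothetical point agent (one projected 'eyeball')."""
    x: float
    y: float
    vx: float
    vy: float


def step(agent, obstacles, avoid_radius=50.0, max_speed=2.0, dt=1.0):
    """Advance one agent by one tick, steering away from nearby obstacles.

    obstacles: list of (x, y) points, assumed to come from the camera
    (e.g. the centroid of the detected spider).
    """
    ax = ay = 0.0
    for ox, oy in obstacles:
        dx, dy = agent.x - ox, agent.y - oy
        dist = math.hypot(dx, dy)
        if 0 < dist < avoid_radius:
            # Repulsion points away from the obstacle and grows as the agent gets closer
            strength = (avoid_radius - dist) / avoid_radius
            ax += dx / dist * strength
            ay += dy / dist * strength

    agent.vx += ax
    agent.vy += ay

    # Clamp the speed so a sudden obstacle doesn't fling the agent across the table
    speed = math.hypot(agent.vx, agent.vy)
    if speed > max_speed:
        agent.vx = agent.vx / speed * max_speed
        agent.vy = agent.vy / speed * max_speed

    agent.x += agent.vx * dt
    agent.y += agent.vy * dt
    return agent
```

For example, an agent heading straight at an obstacle 10 units ahead is slowed and pushed back by the repulsion, while an obstacle outside `avoid_radius` has no effect. A fuller version would also need to handle the table edges and the barrier objects sensed by the Kinect.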

Also new for the Leeds exhibition was the Survey Mapper Live exhibit, which allows people to vote in a Survey Mapper survey by standing in front of the screen and waving their hand over one of the four answers.

The question asked was about increased Leeds independence, and over the course of the three days we received a good number of responses. The results will follow in another post once they have been analysed but, as a first test of the system, this worked really well. The aim is to put something like this into a public space in the future.
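The post doesn’t describe how Survey Mapper Live turns a wave into a vote, but one plausible approach is sketched below in Python. It assumes the four answers are laid out as equal-width columns across the screen and that a tracker supplies the hand’s x-position each frame (or `None` when no hand is seen). The `VoteDetector` class and its `dwell_frames` parameter are hypothetical, not part of Survey Mapper.

```python
class VoteDetector:
    """Hypothetical vote trigger: fires once when a hand hovers over the
    same answer column for `dwell_frames` consecutive frames."""

    def __init__(self, screen_width, num_answers=4, dwell_frames=30):
        self.screen_width = screen_width
        self.num_answers = num_answers
        self.dwell_frames = dwell_frames
        self.current = None  # answer index the hand is currently over
        self.count = 0       # consecutive frames spent over that answer

    def answer_at(self, hand_x):
        """Map a hand x-position to an answer column index, or None if off-screen."""
        if not 0 <= hand_x < self.screen_width:
            return None
        return int(hand_x * self.num_answers // self.screen_width)

    def update(self, hand_x):
        """Feed one frame; return the answer index on the frame a vote fires, else None."""
        answer = None if hand_x is None else self.answer_at(hand_x)
        if answer != self.current:
            # Hand moved to a different answer (or disappeared): restart the dwell count
            self.current = answer
            self.count = 0 if answer is None else 1
        elif answer is not None:
            self.count += 1
        # Fire exactly once per dwell; the count keeps rising afterwards, so no repeats
        if self.current is not None and self.count == self.dwell_frames:
            return self.current
        return None


detector = VoteDetector(screen_width=640, dwell_frames=3)
print([detector.update(x) for x in [100, 100, 100, 100]])
```

Requiring the hand to stay over one answer for several frames is one way to stop people who simply walk past from casting accidental votes; whether the actual exhibit uses a dwell time like this is an assumption.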

Finally, the view from the gallery on the first floor shows the scale of the event and the size of the suspended screens.